After several months of reflection, I’ve come to only one conclusion: a cryptographically secure, decentralized ledger is the only solution to making AI safer.

Quelle surprise

There also needs to be an incentive to contribute training data. People should be rewarded when they choose to contribute their data (DeSo is doing this) and even more so for labeling their data.

Get pennies for enabling the systems that will put you out of work. Sounds like a great deal!

All of this may sound a little ridiculous but it’s not. In fact, the work has already begun by the former CTO of OpenSea.

I dunno, that does make it sound ridiculous.

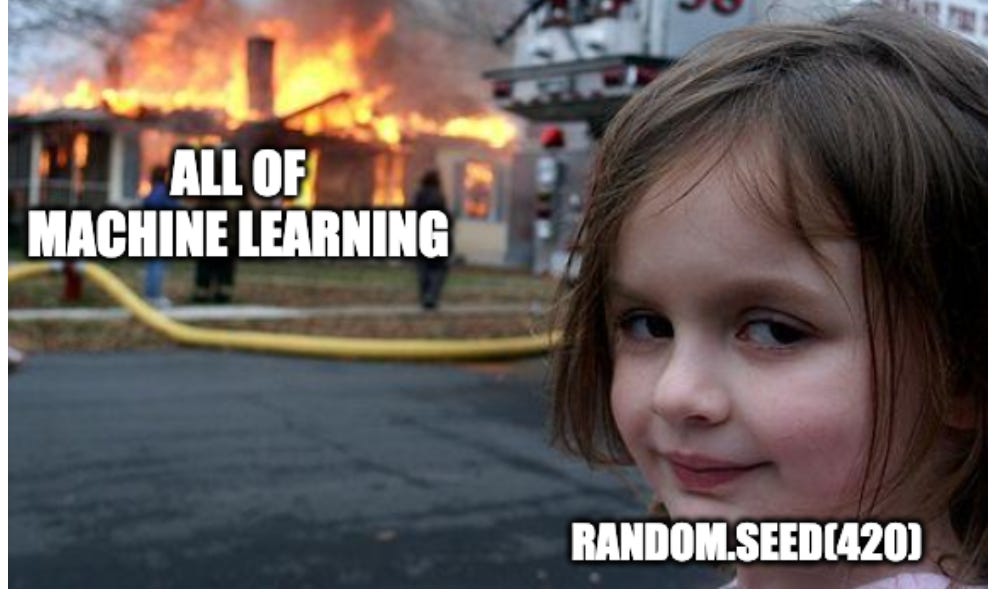

This article is an incredibly deep mine of bad takes, wow

Let’s just build a data-intensive application on a foundation where we have none of that data nearby to start! Let’s forget about all other prior distribution models! Let’s make faulty rationalists* and leaps of assumptions!

And then you click through the profile:

Ah, he’s angling to get a priesthood role if this new faith thing happens. Got it.

*e: this was meant to be “rationalisations” and then my phone keyboard did a dumb. I’m gonna leave it that way because it’s funnier