- 21 Posts

- 31 Comments

I respect an article quoting Mike Tyson in the title! Let’s have a read.

2·1 year ago

Damn, I have this issue:

```
File "/home/st/.pyenv/versions/3.10.12/lib/python3.10/json/encoder.py", line 179, in default
    raise TypeError(f'Object of type {o.__class__.__name__} '
TypeError: Object of type PosixPath is not JSON serializable
[end of output]

note: This error originates from a subprocess, and is likely not a problem with pip.
ERROR: Failed building wheel for pyworld
```
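The `TypeError` line comes from Python’s `json` module, which can’t encode `pathlib.Path` objects (`PosixPath` on Linux) by itself. A minimal sketch reproducing the message and the usual workaround of converting paths to strings; it only illustrates what the message means and is not a confirmed fix for the pyworld wheel build. The `config` dict is a made-up example.

```python
import json
from pathlib import Path

# Hypothetical config containing a Path object, like the one the build chokes on.
config = {"model_dir": Path("/tmp/models")}

message = None
try:
    json.dumps(config)
except TypeError as err:
    # Same kind of message as in the traceback: json has no encoder for Path objects.
    message = str(err)
    print(message)

# Workaround: default=str converts anything json can't encode into its string form.
encoded = json.dumps(config, default=str)
print(encoded)
```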

1·1 year ago

I’m going to try this out, but if it’s using that much VRAM, I might be out of luck.

2·1 year ago

Asking the model about a recent event:

*Thinks for a moment* Well, one thing that comes to mind is the recent advancements in space exploration! Did you hear about NASA’s Perseverance rover landing on Mars last month? *Excitedly* It’s so cool to see how much we’re learning about the red planet and the potential for life beyond Earth! And with private companies like SpaceX and Blue Origin leading the charge in space travel, it’s an exciting time to be alive for anyone who loves science and tech. *Her eyes shine with enthusiasm* What’s your take on all this, Matt? Are you interested in space exploration at all?

And the date of that was:

https://edition.cnn.com/2021/02/18/world/mars-perseverance-rover-landing-scn-trnd/index.html

The landing was on 18 February 2021 and the model calls it “last month”, so it was trained on data from at least as recently as March 2021.

2·1 year ago

I had issues getting it to run; I’ll come back to it. I have other ways to generate Bark audio. Bark is by far the most natural-sounding option I’ve found; it just sounds like it was recorded on a PC mic from 1999. Silero and ElevenLabs sound monotone to me.

I haven’t tried Tortoise yet, I’ll have to try that!

1·1 year ago

I ended up downloading all of the 13B SuperHOT models. Each one seems great, but I’m still working out how to set the parameters correctly.

16·1 year ago

Can’t you just search for communities across all instances?

1·1 year ago

Actually, since the comments will end up pushing the tutorial off the page, just create a new post in this community for questions!

1·1 year ago

If anyone can suggest a place to upload the voices I created, I’ll upload them and reply to this comment with the download link.

1·1 year ago

Now you can move your voice into the right Bark folder in ooba to play with it. You can also test the voice in the notebook by continuing through the remaining code blocks; I’m sure you’ll be able to figure that part out yourself.

To be able to select the voice, I had to overwrite one of the existing English voices, because my voice didn’t appear in the list.

Overwrite `en_speaker_0.npz` (back up the original first if you want) in one of these folders:

```
oobabooga_linux_2/installer_files/env/lib/python3.1/site-packages/bark/assets/prompts/v2
oobabooga_linux_2/installer_files/env/lib/python3.1/site-packages/bark/assets/prompts/
```

Then select the voice (or the v2/ voice) from the Bark voice list in ooba.
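If you’d rather script the backup-and-overwrite step, here is a rough Python sketch. The function name `install_voice` and the `.bak` backup suffix are my own hypothetical choices, not part of Bark or ooba; point `prompts_dir` at whichever of the two folders above you use.

```python
import shutil
from pathlib import Path


def install_voice(new_voice: Path, prompts_dir: Path,
                  target_name: str = "en_speaker_0.npz") -> Path:
    """Hypothetical helper: back up an existing Bark prompt, then overwrite it.

    Copies `new_voice` over `prompts_dir / target_name`, saving the original
    as `<target_name>.bak` the first time it is replaced.
    """
    target = prompts_dir / target_name
    backup = target.with_name(target_name + ".bak")
    if target.exists() and not backup.exists():
        shutil.copy2(target, backup)  # keep only the very first original
    shutil.copy2(new_voice, target)   # your cloned voice takes the speaker's slot
    return target
```

For example, `install_voice(Path("my_voice.npz"), Path("oobabooga_linux_2/installer_files/env/lib/python3.1/site-packages/bark/assets/prompts/v2"))`, where `my_voice.npz` stands in for your cloned voice file. Skipping the backup when one already exists means rerunning it never clobbers the real original.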

1·1 year ago

You now need a WAV file under 10 seconds long to train from; apparently as little as 4 seconds works too. I won’t cover how to make one in this tutorial.

For mine, I cut some audio from a clip of a woman speaking with very little background noise. You can use https://www.lalal.ai/ to extract voice from background noise, but I didn’t need to do that in this case. I did when using a clip of Megabyte from Reboot talking, which worked… mostly well.
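Since the sample needs to be short, a quick way to check a clip’s length before training is Python’s built-in `wave` module. This is a minimal sketch: the helper names are hypothetical, the 4 to 10 second window is just the rough numbers mentioned above (not something the notebook enforces), and `wave` only reads uncompressed PCM WAV files.

```python
import wave


def clip_seconds(path: str) -> float:
    """Return the duration of a PCM WAV file in seconds."""
    with wave.open(path, "rb") as wav:
        return wav.getnframes() / wav.getframerate()


def is_usable_sample(path: str, min_s: float = 4.0, max_s: float = 10.0) -> bool:
    """True if the clip is at least min_s long and shorter than max_s."""
    return min_s <= clip_seconds(path) < max_s
```

Usage: `is_usable_sample("input/audio.wav")`, run from the bark folder.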

I created an input folder to put my training wav file in:

```
bark-with-voice-clone/input
```

Now we can go through the next section of the tutorial:

Run Jupyter Notebook while in the bark folder:

```
jupyter notebook
```

This will open a new browser tab with the Jupyter interface. Click on `clone_voice.ipynb`.

This is very similar to Google Colab, where you run blocks of code. Click on the first code block and click Run. If the block has a “[*]” next to it, it is still processing; just give it a minute to finish.

This will take a while and download a bunch of stuff.

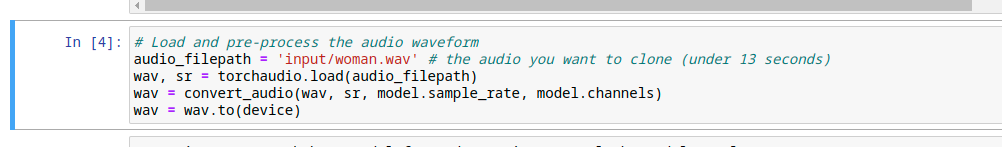

If it finishes without errors, run blocks 2 and 3. In block 4, change the line to `filepath = "input/audio.wav"`.

Make sure this block has a valid file path and audio file name. To prevent a permissions-related error, remove the leading “/” so the path is relative to the bark folder.
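The leading “/” matters because it turns the path into an absolute one. A small `pathlib` illustration, assuming POSIX paths since I’m on Linux; the exact error you get for the absolute path may vary with your setup.

```python
from pathlib import Path, PurePosixPath

# With a leading "/", the path is absolute: it points to an "input" folder at the
# filesystem root, which a normal user typically can't create or write to.
absolute = PurePosixPath("/input/audio.wav")

# Without it, the path is relative to the notebook's working directory
# (the bark-with-voice-clone folder, if Jupyter was started there).
relative = PurePosixPath("input/audio.wav")

print(absolute.is_absolute())  # True
print(relative.is_absolute())  # False

# Where the notebook will actually look for the relative path:
resolved = Path.cwd().joinpath(*relative.parts)
print(resolved)
```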

Outputs will be found in:

```
bark\assets\prompts
```
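That folder already holds Bark’s stock `.npz` voice prompts, so your new file can be easy to miss. A short sketch that picks the most recently modified `.npz`; the helper name `newest_prompt` is hypothetical, and it assumes the newest file is the one the notebook just wrote.

```python
from pathlib import Path
from typing import Optional


def newest_prompt(prompts_dir: str) -> Optional[Path]:
    """Return the most recently modified .npz in prompts_dir, or None if there is none."""
    candidates = list(Path(prompts_dir).glob("*.npz"))
    if not candidates:
        return None
    return max(candidates, key=lambda p: p.stat().st_mtime)
```

On Linux, call it as `newest_prompt("bark/assets/prompts")` from the bark-with-voice-clone folder.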

1·1 year ago

Now for the tricky part:

When I tried to run through voice cloning, I had this error:

```
--> 153 def auto_train(data_path, save_path='model.pth', load_model: str | None = None, save_epochs=1):
    154     data_x, data_y = [], []
    156     if load_model and os.path.isfile(load_model):

TypeError: unsupported operand type(s) for |: 'type' and 'NoneType'
```

This comes from the file `customtokenizer.py` in the directory `bark-with-voice-clone/hubert/`. The `str | None` annotation syntax needs Python 3.10 or newer, so it fails under Python 3.9. To solve this, I plugged the error into ChatGPT and made some slight modifications to the code.

At the top I added the import for Union underneath the other imports:

```python
from typing import Union
```

And at line 154 (153 before adding the import above), I modified it as instructed:

```python
def auto_train(data_path, save_path='model.pth', load_model: Union[str, None] = None, save_epochs=1):
```

Compare to the original line:

```python
def auto_train(data_path, save_path='model.pth', load_model: str | None = None, save_epochs=1):
```

And that solved the issue. We should be ready to go!
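`Union[str, None]` is the same type as `Optional[str]`, so either spelling works on Python 3.9. Below is a minimal check, with a hypothetical `auto_train_stub` standing in for the real `auto_train` (only the signature and the model-file check mirror the original). Adding `from __future__ import annotations` at the top of the file is another commonly used alternative, since it stops annotations from being evaluated, but that is not what I did here.

```python
import os
from typing import Optional, Union

# Both spellings describe "a str or None" and work on Python 3.9.
assert Union[str, None] == Optional[str]


def auto_train_stub(data_path, save_path='model.pth',
                    load_model: Union[str, None] = None, save_epochs=1):
    """Hypothetical stand-in for auto_train; only the signature matches the fix."""
    if load_model and os.path.isfile(load_model):
        return "resume"  # a saved model file exists, so training would continue from it
    return "fresh"       # otherwise training would start from scratch
```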

1·1 year ago

Now all the components should be installed. Note that I did not need to install numpy or torch, which the original post says to do.

You can test that it works by running:

```
jupyter notebook
```

An interface like this should then pop up in your default browser:

2·1 year ago

Disclaimer: I’m using Linux, but the same general steps should apply to Windows as well.

First, create a venv so we keep everything isolated:

```
python3.9 -m venv bark-with-voice-clone/
```

Yes, this requires **Python 3.9**; it will not work with Python 3.11.
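If you want a script or notebook cell to warn when it’s running on the wrong interpreter, a tiny guard like this works. The function name is hypothetical, and it simply encodes the 3.9 requirement stated above; other versions may or may not work.

```python
import sys


def is_supported_python(version_info=sys.version_info) -> bool:
    """Hypothetical guard: True only on Python 3.9, which this setup was tested with."""
    return tuple(version_info[:2]) == (3, 9)


if not is_supported_python():
    print(f"Warning: running Python {sys.version.split()[0]}, but this setup expects 3.9")
```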

```
cd bark-with-voice-clone
source bin/activate
pip install .
```

When this completes:

```
pip install jupyterlab
pip install notebook
```

I needed to install more than what was listed in the original post, because each time I ran the notebook it reported another missing package. This is because the original poster already had these components installed; since we are using a virtual environment, these extras are required:

Pip install the packages below:

- soundfile
- ipywidgets
- fairseq
- audiolm_pytorch
- tensorboardX
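Rather than rerunning the notebook to discover each missing package one at a time, you can check them all at once from inside the activated venv. A minimal sketch using the standard library’s `importlib.util.find_spec`; it assumes each package’s import name matches the name in the list above.

```python
import importlib.util

# Import names of the extra packages listed above (assumed to match the pip names).
EXTRAS = ["soundfile", "ipywidgets", "fairseq", "audiolm_pytorch", "tensorboardX"]


def missing_modules(names):
    """Return the module names that can't be found in the current environment."""
    return [name for name in names if importlib.util.find_spec(name) is None]


if __name__ == "__main__":
    print("missing:", missing_modules(EXTRAS) or "none")
```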

Yeah, I often have those dreams where I’m out of place and being judged by everyone who has agreed to be weird.

3·1 year ago

Goddammit, why did you have to say that? Now I can’t just gobble everyone’s movie popcorn.

1·1 year ago

I would agree, but the rate of innovation in AI is so unpredictable that it could go either way.

0·1 year ago

I saw an image comparing these with the older versions, and while the size of the vehicle has massively increased, the size of the actual tray has decreased.

Perhaps check out https://github.com/gitmylo/audio-webui